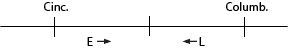

At 12:00 noon, Lunis started driving from Columbus to Cincinnati at a steady 40 mph. Fifteen minutes later, at 12:15, Ethan started driving from Cincinnati to Columbus at a steady 50 mph. The two cars passed each other at a point midway between the two cities, as a high-flying drone observed. Using this information, calculate the distance between Columbus and Cincinnati. (Is this distance correct?)

Solution

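Distance equals rate times time. Let $t$ be the number of hours since noon, so Lunis has been driving for $t$ hours and Ethan for $t - \tfrac{1}{4}$ hours. Because they pass each other at the midpoint, each has covered the same distance at the moment when they meet:

$$40t = 50\left(t - \tfrac{1}{4}\right) \quad\Longrightarrow\quad 10t = 12.5 \quad\Longrightarrow\quad t = 1.25$$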

Thus they meet 1 hour and 15 minutes after Lunis set out (at 1:15 p.m.), and the distance each drove is 40 × 1.25 = 50 miles for Lunis and 50 × 1 = 50 miles for Ethan. According to the problem, then, Cincinnati and Columbus are 100 miles apart.
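As a quick check of the arithmetic, here is a minimal Python sketch. The function name `meeting_point` and its parameters are illustrative choices, not part of the original puzzle; it assumes only that both drivers cover the same distance when they meet.

```python
from fractions import Fraction

def meeting_point(speed_a, speed_b, head_start_hours):
    """Return (hours after A departs, miles each drove) when both have
    covered the same distance. B leaves head_start_hours after A and
    must be faster than A."""
    # speed_a * t = speed_b * (t - head_start)
    # => t = speed_b * head_start / (speed_b - speed_a)
    t = Fraction(speed_b) * head_start_hours / (speed_b - speed_a)
    return t, speed_a * t

# Lunis: 40 mph from noon; Ethan: 50 mph, leaving a quarter hour later.
t, half = meeting_point(40, 50, Fraction(1, 4))
total = 2 * half  # they meet at the midpoint, so double the half distance
print(t, half, total)  # prints: 5/4 50 100
```

Exact fractions are used here so that the quarter-hour head start does not pick up floating-point rounding.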

According to the atlas, they're actually 109 miles apart. How fast were Lunis and Ethan really going? Or is it just that the road is not straight?
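One way to explore the first question is sketched below, under a loudly stated assumption that the puzzle itself does not give: the cars still pass each other at the midpoint at 1:15 p.m., and only the speeds change. The helper `speeds_for_distance` is a hypothetical name introduced for illustration.

```python
from fractions import Fraction

def speeds_for_distance(total_miles, meet_hours, head_start_hours):
    """Speeds (mph) needed for both drivers to reach the midpoint together.
    ASSUMPTION: the meeting time is kept fixed; the puzzle does not say so."""
    half = Fraction(total_miles) / 2
    first_driver = half / meet_hours                        # drove the full time
    second_driver = half / (meet_hours - head_start_hours)  # left later
    return first_driver, second_driver

# Atlas distance of 109 miles, meeting 1.25 hours after noon.
lunis, ethan = speeds_for_distance(109, Fraction(5, 4), Fraction(1, 4))
print(float(lunis), float(ethan))  # prints: 43.6 54.5
```

Under that assumption, Lunis would have averaged 43.6 mph and Ethan 54.5 mph. Other combinations of speeds and meeting times are equally consistent with a 109-mile trip, so this is one possible answer rather than the answer.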